System Failure: 7 Shocking Causes and How to Prevent Them

Ever experienced a sudden crash when you needed your tech the most? That’s system failure in action—silent, sudden, and devastating. From hospitals to highways, when systems collapse, chaos follows. Let’s uncover why they fail and how we can stop them.

What Is System Failure?

At its core, a system failure occurs when a network, machine, software, or process stops functioning as intended. This breakdown can be temporary or permanent, partial or total. In technical terms, it’s the point at which a system can no longer deliver its expected output or service.

Defining System Failure in Modern Contexts

Today’s systems are more interconnected than ever. A system isn’t just a single computer or machine—it’s an ecosystem of hardware, software, human input, and environmental factors. When one component falters, the ripple effect can trigger a full-blown system failure. For instance, a single faulty sensor in a power grid can lead to cascading blackouts across regions.

- System failure can occur in mechanical, digital, biological, or organizational systems.

- Failures may be latent (hidden for long periods) or acute (immediate and visible).

- The impact varies from minor inconvenience to life-threatening emergencies.

“A system is only as strong as its weakest link.” This variation on the old proverb about chains captures the essence of systemic vulnerability.

Types of System Failures

Not all system failures are the same. They vary by origin, scope, and consequence. Understanding these types helps in diagnosing and preventing future breakdowns.

- Hardware Failure: Physical components like servers, circuits, or engines break down.

- Software Failure: Bugs, crashes, or logic errors cause programs to malfunction.

- Human Error: Mistakes in operation, configuration, or decision-making initiate failure.

- Environmental Failure: Natural disasters, temperature extremes, or power surges disrupt operations.

- Cascading Failure: One failure triggers a chain reaction, collapsing interdependent systems.

For example, the 2003 Northeast Blackout in the U.S. was made far worse by a software bug in an Ohio utility company’s alarm system, which left operators unaware that transmission lines were failing. The resulting cascading failure affected about 50 million people across eight U.S. states and the Canadian province of Ontario. You can read more about this incident in the U.S.-Canada Power System Outage Task Force report.
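
The chain reaction described above can be sketched as a walk over a dependency graph. This is a toy model, not a grid simulation: the component names are hypothetical, and it assumes the worst case, where a component fails as soon as anything it depends on fails.

```python
from collections import deque

def cascade(dependents, initial_failure):
    """Return every component that fails once `initial_failure` goes down.

    `dependents` maps a component to the components that rely on it.
    Assumption: no redundancy, so one failed dependency is enough.
    """
    failed = {initial_failure}
    queue = deque([initial_failure])
    while queue:
        current = queue.popleft()
        for downstream in dependents.get(current, []):
            if downstream not in failed:
                failed.add(downstream)
                queue.append(downstream)
    return failed

# Hypothetical system; the names are illustrative only.
SYSTEM = {
    "sensor": ["controller"],
    "controller": ["line_a", "line_b"],
    "line_a": ["substation_1"],
    "line_b": ["substation_1", "substation_2"],
}

print(sorted(cascade(SYSTEM, "sensor")))
print(sorted(cascade(SYSTEM, "line_a")))
```

In this sketch a failure at the top of the graph takes everything down, while a failure in `line_a` stays contained to one substation. Adding redundancy would mean requiring all of a component's dependencies to fail before it does.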

Common Causes of System Failure

Behind every system failure lies a root cause—or often, a combination of them. Identifying these causes is the first step toward building resilient systems.

Poor Design and Engineering Flaws

Even the most advanced systems can fail if they’re built on shaky foundations. Design flaws—such as inadequate load capacity, poor redundancy, or flawed logic—can remain dormant until triggered by stress.

- The 1986 Challenger Space Shuttle disaster was caused by a faulty O-ring design that failed in cold weather.

- In software, poor architecture can lead to memory leaks or race conditions that crash applications under load.

- Design reviews and stress testing are essential to catch flaws early.

According to the Rogers Commission, the presidential commission that investigated the accident, the Challenger failure was not just technical but also organizational: pressure to launch overrode engineering concerns. More details are available at NASA’s official Challenger page.

Software Bugs and Glitches

In our digital age, software is the backbone of nearly every system. Yet, it’s also one of the most common sources of system failure. A single line of faulty code can bring down entire networks.

- The 2012 Knight Capital Group incident lost $440 million in 45 minutes due to a software deployment glitch.

- Buffer overflows, null pointer exceptions, and race conditions are frequent culprits.

- Automated testing, code reviews, and rollback mechanisms are critical safeguards.
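
Race conditions, one of the culprits listed above, are easiest to see in a shared counter. The sketch below is a minimal illustration in Python, with hypothetical class and function names: the lock makes the read-modify-write step atomic, so no update is lost when several threads increment at once.

```python
import threading

class SafeCounter:
    """A counter that several threads can increment without losing updates."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # "value += 1" is a read, an add, and a write. Without the lock,
        # two threads can read the same old value and one update is lost.
        with self._lock:
            self.value += 1

def run(workers=8, increments=10_000):
    """Increment one shared counter from several threads and return the total."""
    counter = SafeCounter()

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter.value

print(run())
```

With the lock in place the total always equals `workers * increments`. Removing it makes the result depend on thread scheduling, which is exactly why such bugs often surface only under load.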

“It’s not a bug, it’s a feature.” — A common joke among developers, but in reality, uncaught bugs can be catastrophic.

Hardware Degradation and Wear

Physical components degrade over time. Hard drives fail, circuits overheat, and mechanical parts wear out. Without proper monitoring and maintenance, hardware becomes a ticking time bomb.

- Backblaze’s drive statistics reports have put annualized disk failure rates at roughly 1-2% across its fleet in recent years, with wide variation by drive model and age.

- Vibration, dust, and temperature fluctuations accelerate wear.

- Proactive replacement and predictive maintenance can prevent unexpected crashes.
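
Failure rates like these are usually quoted as an annualized failure rate (AFR) computed from drive-days rather than from a simple drive count. The sketch below shows that arithmetic; the function name and the sample figures are illustrative, not real fleet data.

```python
def annualized_failure_rate(failures, drive_days):
    """Return the annualized failure rate as a percentage.

    drive_days is the total number of days all drives were in service,
    so 1,000 drives running for a full year contribute 365,000 drive-days.
    """
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365
    return 100 * failures / drive_years

# Hypothetical fleet: 1,000 drives in service all year, 15 failures.
print(annualized_failure_rate(failures=15, drive_days=365_000))
```

Counting drive-days keeps the rate fair when drives are added or retired partway through the year.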

Backblaze publishes its hard drive failure statistics on a regular schedule, which you can explore at Backblaze Blog.

System Failure in Critical Infrastructure

When system failure strikes essential services like power, water, or healthcare, the consequences can be life-threatening. These systems are designed with redundancy, yet they’re not immune to collapse.

Power Grid Failures

Electricity grids are complex networks balancing supply and demand in real time. A failure in one node can propagate across the system.

- The 2019 UK blackout affected about 1.1 million customers after a lightning strike was followed by the disconnection of a gas-fired power station and an offshore wind farm.

- Smart grids use AI and sensors to detect anomalies, but they also introduce new cyber risks.

- Frequency instability and lack of inertia in renewable-heavy grids increase vulnerability.

The National Grid ESO’s post-event analysis is available at National Grid ESO.

Healthcare System Collapse

Hospitals rely on integrated systems for patient records, diagnostics, and life support. A system failure here can literally be a matter of life and death.

- In 2021, Ireland’s Health Service Executive (HSE) was crippled by a ransomware attack, shutting down IT systems for weeks.

- Backup generators failed during Hurricane Katrina, leading to patient evacuations and fatalities.

- Digital dependency without analog fallbacks increases risk.

The HSE cyberattack is detailed in a report by Irish Parliamentary Records.

Transportation Network Disruptions

From air traffic control to subway signaling, transportation systems are highly automated. A single failure can paralyze cities.

- In 2017, a software update caused London’s Underground signaling system to crash, delaying thousands.

- Air traffic control systems in the U.S. have faced outages due to aging infrastructure.

- Autonomous vehicles depend on flawless sensor and AI integration—any glitch risks safety.

The UK’s Rail Accident Investigation Branch (RAIB) published findings on signaling failures at RAIB Reports.

Human Factors in System Failure

Despite automation, humans remain central to system operation. Our decisions, habits, and limitations often play a key role in system failure.

Operator Error and Misjudgment

Even with advanced systems, human operators make critical decisions under pressure. Fatigue, stress, or lack of training can lead to mistakes.

- The 1979 Three Mile Island nuclear accident was worsened by operators misreading indicators.

- Pilots have mishandled sudden losses of automation, as on Air France 447 in 2009, when the autopilot disconnected after airspeed readings became unreliable and the crew stalled the aircraft.

- Clear interfaces, better training, and decision-support tools reduce error rates.

“Human error is not a cause; it’s a symptom of deeper systemic issues.” — Sidney Dekker, safety expert.

Organizational Culture and Communication Breakdown

Failures often stem not from individuals, but from organizational culture. Siloed teams, poor communication, and suppression of dissenting voices create blind spots.

- The Columbia Space Shuttle disaster (2003) was partly due to NASA’s culture discouraging engineers from raising concerns.

- In healthcare, miscommunication during shift changes leads to medication errors.

- Just culture models encourage reporting without fear of blame, improving safety.

NASA’s Columbia Accident Investigation Board report is accessible at NASA CAIB Report.

Training and Preparedness Gaps

Even the best systems fail if users aren’t trained to handle emergencies. Preparedness is not just about knowing what to do—it’s about practicing under stress.

- Simulations and drills improve response times during real failures.

- Checklists, like those used in aviation, reduce cognitive load in crises.

- Continuous learning and certification keep skills sharp.

The WHO recommends simulation-based training for healthcare teams, as noted in their Patient Safety Curriculum Guide.

Cybersecurity and System Failure

In the digital era, cyberattacks are a leading cause of system failure. Malware, ransomware, and phishing can disable systems in seconds.

Ransomware Attacks on Critical Systems

Ransomware encrypts data and demands payment for decryption. When it hits hospitals, utilities, or governments, the impact is immediate.

- The 2017 WannaCry attack affected over 200,000 computers in 150 countries, including the UK’s NHS.

- Backups and air-gapped systems are essential for recovery.

- Zero-trust security models minimize lateral movement by attackers.

The National Cyber Security Centre (NCSC) details WannaCry’s impact at NCSC UK.

Insider Threats and Data Breaches

Not all threats come from outside. Employees or contractors with access can intentionally or accidentally cause system failure.

- Edward Snowden’s 2013 leak exposed vulnerabilities in NSA’s access controls.

- Privileged access management (PAM) limits what users can do.

- User behavior analytics (UBA) detect anomalous activity.

The U.S. Cybersecurity and Infrastructure Security Agency (CISA) offers guidelines on insider threats at CISA Insider Threat.

Supply Chain Vulnerabilities

Modern systems rely on third-party software and hardware. A compromise in one link can infect the entire chain.

- The 2020 SolarWinds hack inserted malware into a software update, affecting U.S. agencies and corporations.

- Vendors must adhere to strict security standards and audits.

- A Software Bill of Materials (SBOM) helps track components and their known vulnerabilities.
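
To make the SBOM idea concrete, here is a minimal sketch that matches a component inventory against a list of known advisories. The data layout, component names, and advisory IDs are all hypothetical; real SBOM formats and vulnerability feeds carry far more detail.

```python
def find_vulnerable(sbom, advisories):
    """Return (name, version, advisory_id) for every affected component.

    sbom: list of {"name": ..., "version": ...} dicts (simplified layout).
    advisories: dict mapping (name, version) to a list of advisory IDs.
    """
    hits = []
    for component in sbom:
        key = (component["name"], component["version"])
        for advisory_id in advisories.get(key, []):
            hits.append((component["name"], component["version"], advisory_id))
    return hits

# Hypothetical inventory and advisory feed; none of these names are real.
SBOM = [
    {"name": "libexample", "version": "1.2.0"},
    {"name": "parserkit", "version": "0.9.1"},
    {"name": "netutil", "version": "3.4.5"},
]
ADVISORIES = {
    ("parserkit", "0.9.1"): ["ADVISORY-0001"],
    ("netutil", "2.0.0"): ["ADVISORY-0002"],
}

print(find_vulnerable(SBOM, ADVISORIES))
```

Only `parserkit` is flagged here, because the `netutil` advisory applies to a different version than the one in the inventory. That exact-version matching is a simplification; real advisories usually describe version ranges.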

CISA’s response to SolarWinds is documented at CISA SolarWinds.

Preventing System Failure: Best Practices

While we can’t eliminate all risks, we can drastically reduce the likelihood and impact of system failure through proactive strategies.

Redundancy and Failover Mechanisms

Redundancy means having backup components that take over when the primary fails. It’s a cornerstone of resilient design.

- Data centers use redundant power supplies, cooling, and network paths.

- Aircraft have multiple flight control systems.

- Failover systems must be tested regularly to ensure they work when needed.
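
The failover pattern described above can be sketched in a few lines. This is a simplified illustration with hypothetical handler names: each provider is tried in order, and the first one that succeeds serves the request.

```python
def call_with_failover(providers, request):
    """Try each (name, handler) pair in order and return the first success."""
    errors = []
    for name, handler in providers:
        try:
            return name, handler(request)
        except Exception as exc:  # broad on purpose: any failure triggers failover
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))

# Hypothetical handlers standing in for a primary and a standby service.
def primary(request):
    raise ConnectionError("primary unreachable")

def standby(request):
    return f"handled {request}"

print(call_with_failover([("primary", primary), ("standby", standby)], "req-1"))
```

Returning the provider name alongside the result makes it visible when the standby is carrying traffic. Exercising that path regularly is the only way to know the standby works before it is needed.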

“Redundancy is not duplication; it’s intelligent backup.” — Engineering principle in high-availability systems.

Regular Maintenance and Monitoring

Preventive maintenance catches issues before they escalate. Continuous monitoring provides real-time insights into system health.

- IoT sensors track temperature, vibration, and performance metrics.

- Predictive analytics use machine learning to forecast failures.

- Scheduled audits and patching keep systems secure and stable.
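
As a rough illustration of monitoring, the sketch below flags sensor readings that sit far outside the recent average. It is a simple rolling z-score check, not a machine learning model, and the window size, threshold, and sample temperatures are arbitrary assumptions.

```python
from collections import deque
from statistics import mean, pstdev

def detect_anomalies(readings, window=5, threshold=3.0):
    """Return the indexes of readings far from the recent rolling average."""
    history = deque(maxlen=window)
    anomalies = []
    for index, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # A perfectly flat history (sigma == 0) is skipped in this sketch.
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(index)
        # The reading joins the history even if it was flagged.
        history.append(value)
    return anomalies

# Hypothetical temperature readings with one spike at index 6.
temperatures = [70, 71, 69, 70, 72, 71, 95, 70]
print(detect_anomalies(temperatures))  # prints [6]
```

A fixed threshold like this produces false positives on noisy signals and misses slow drifts, which is one reason production systems layer more sophisticated models on top.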

IBM’s Maximo platform offers predictive maintenance solutions, detailed at IBM Maximo.

Disaster Recovery and Business Continuity Planning

When failure happens, having a recovery plan minimizes downtime and data loss.

- Recovery Time Objective (RTO) defines the maximum acceptable downtime, while Recovery Point Objective (RPO) defines the maximum acceptable data loss, measured as the time since the last recoverable copy.

- Cloud backups and geographically distributed data centers enhance resilience.

- Regular drills ensure teams know their roles during crises.
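
RTO and RPO become concrete once they are checked against an actual incident timeline. The sketch below does that arithmetic; the function name, timestamps, and objectives are hypothetical.

```python
from datetime import datetime, timedelta

def check_recovery(last_backup, failure_time, restored_time, rpo, rto):
    """Compare one incident against its recovery objectives."""
    data_loss = failure_time - last_backup    # work since the last backup is lost
    downtime = restored_time - failure_time   # how long the service was unavailable
    return {
        "data_loss": data_loss,
        "downtime": downtime,
        "rpo_met": data_loss <= rpo,
        "rto_met": downtime <= rto,
    }

# Hypothetical incident: backup at 02:00, failure at 14:30, restored at 16:00.
report = check_recovery(
    last_backup=datetime(2024, 1, 10, 2, 0),
    failure_time=datetime(2024, 1, 10, 14, 30),
    restored_time=datetime(2024, 1, 10, 16, 0),
    rpo=timedelta(hours=4),
    rto=timedelta(hours=2),
)
print(report["rpo_met"], report["rto_met"])  # prints False True
```

In this example the service came back within the two-hour RTO, but a once-a-night backup cannot satisfy a four-hour RPO. Closing that gap means backing up more often, not recovering faster.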

The Federal Emergency Management Agency (FEMA) provides business continuity templates at FEMA Business Continuity.

Case Studies of Major System Failures

History is filled with system failures that teach us valuable lessons. Let’s examine a few landmark cases.

The 2003 Northeast Blackout

On August 14, 2003, a software bug in FirstEnergy’s alarm system left operators unaware that transmission lines in Ohio were overloading and tripping. About two hours later, a cascade that unfolded within minutes shut down power across the Northeast U.S. and Ontario.

- Over 50 million people were affected.

- Root causes: inadequate situational awareness, inadequate tree trimming near transmission lines, and poor coordination between grid operators.

- Result: New reliability standards and mandatory grid audits.

Full report: U.S.-Canada Power System Outage Task Force.

Therac-25 Radiation Therapy Machine

Between 1985 and 1987, the Therac-25 radiation therapy machine delivered massive radiation overdoses because of software race conditions. At least six patients were severely injured or killed.

- Root cause: Poor software design and lack of hardware safety interlocks.

- The manufacturer initially dismissed user reports of malfunctions.

- Legacy: A landmark case in software safety engineering.

Detailed analysis: Virginia Tech Therac-25 Case Study.

Facebook’s 2021 Global Outage

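On October 4, 2021, Facebook, Instagram, and WhatsApp went offline for nearly six hours after a faulty configuration change to the company’s backbone routers caused its BGP (Border Gateway Protocol) routes to be withdrawn, leaving its DNS servers unreachable.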

- Internal tools and communication systems also failed.

- Engineers couldn’t reach servers remotely, and physical access to the data centers was slowed by security safeguards.

- Loss: an estimated $60 million or more in ad revenue, plus significant reputational damage.

Facebook’s engineering post-mortem: Meta Engineering Blog.

The Future of System Resilience

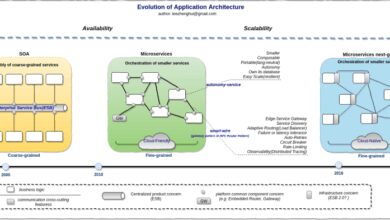

As systems grow more complex, so must our strategies for preventing failure. The future lies in adaptive, self-healing, and intelligent systems.

AI and Machine Learning in Failure Prediction

AI can analyze vast datasets to detect patterns that humans miss. Machine learning models predict failures before they happen.

- Google has used DeepMind’s machine learning to optimize data center cooling, reporting a reduction of up to 40% in the energy used for cooling.

- Predictive maintenance in manufacturing can reduce downtime by up to 50%, according to some industry estimates.

- Challenges include data quality, model transparency, and false positives.

Google’s DeepMind AI for energy efficiency: DeepMind Blog.

Self-Healing Systems and Autonomous Recovery

Next-gen systems can detect, diagnose, and fix problems without human intervention.

- Autonomous networks reroute traffic during outages.

- Self-repairing software patches vulnerabilities in real time.

- Blockchain-based systems are designed to preserve data integrity even when some participants are compromised.
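
A very small version of autonomous recovery is a supervisor that restarts a failed task and backs off between attempts. The sketch below is an illustration under simple assumptions: the function names are hypothetical, and a real supervisor would also log failures and distinguish recoverable errors from fatal ones.

```python
import time

def supervise(task, max_restarts=3, base_delay=0.1, sleep=time.sleep):
    """Run task, restarting it with exponential backoff when it raises."""
    attempt = 0
    while True:
        try:
            return task()
        except Exception:
            if attempt >= max_restarts:
                raise  # give up and surface the last error to a human
            sleep(base_delay * 2 ** attempt)  # wait longer after each failure
            attempt += 1

def make_flaky(failures_before_success):
    """Build a hypothetical task that fails a fixed number of times first."""
    state = {"calls": 0}

    def task():
        state["calls"] += 1
        if state["calls"] <= failures_before_success:
            raise RuntimeError("transient fault")
        return f"recovered after {state['calls']} attempts"

    return task

print(supervise(make_flaky(2), base_delay=0.01))
```

The restart limit matters as much as the restart itself: a supervisor that retries forever can hide a persistent fault instead of escalating it.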

“The best system doesn’t just fail safely—it recovers itself.” — Vision of autonomous infrastructure.

Building a Culture of Resilience

Technology alone isn’t enough. Organizations must foster a culture that values transparency, learning, and continuous improvement.

- Blame-free post-mortems encourage honest reporting.

- Cross-functional teams improve system understanding.

- Resilience is not a project—it’s a mindset.

The DevOps movement emphasizes shared responsibility and rapid feedback loops, as outlined at DevOps.com.

What is the most common cause of system failure?

There is rarely a single cause. Most system failures stem from a combination of human error and poor system design. Technical flaws like software bugs or hardware malfunctions play a role, but they are often exacerbated by inadequate training, communication breakdowns, or organizational pressures that override safety protocols.

How can organizations prevent system failure?

Organizations can prevent system failure by implementing redundancy, conducting regular maintenance, training staff, adopting robust cybersecurity practices, and creating disaster recovery plans. A culture of transparency and continuous learning is also essential for identifying and addressing risks early.

What is a cascading system failure?

A cascading system failure occurs when the failure of one component triggers the failure of subsequent components, leading to a widespread collapse. This is common in interconnected systems like power grids or financial networks, where dependencies amplify the initial fault.

Can AI prevent system failure?

Yes, AI can help prevent system failure by analyzing data to predict issues before they occur, automating responses, and optimizing system performance. However, AI systems themselves can fail if not properly designed, monitored, and validated.

What should you do during a system failure?

During a system failure, follow established protocols: switch to backup systems if available, notify technical teams, communicate with stakeholders, and document the incident for post-mortem analysis. Avoid making rushed decisions without assessing the full impact.

System failure is not a matter of if, but when. From engineering flaws to cyberattacks, the triggers are diverse, but the lessons are clear: resilience must be designed in from the start. By understanding the causes, learning from past failures, and investing in redundancy, monitoring, and culture, we can build systems that don’t just survive—but adapt and recover. The future belongs to those who prepare, not just react.
